Neural nets with flaws can be harmless … yet dangerous. So why are reports of problems being roundly ignored?

Imagine a brand new and nearly completely untested technology, capable of crashing at any moment under the slightest provocation without explanation – or even the ability to diagnose the problem. No self-respecting IT department would have anything to do with it, and any that did would keep it isolated from core systems.

Other parts involve value judgements such as "does this seem close to that?" where "close" doesn't have a strict definition – more of a vibe than a rule. That's the bit an AI-based classifier should be able to perform "well enough" – better than any algorithm, if not quite as effectively as a human being. AI has ushered in the age of "mid" – not great, but not horrid either. This sort of AI-driven classifier lands perfectly in that mid.

That external confirmation – Groq had been able to replicate my finding across the LLMs it supports – changed the picture completely. It meant I wasn't just imagining this, nor seeing something peculiar to myself. An excellent suggestion, but not a small task. Given the nature of the flaw – it affected nearly every LLM tested – I'd need to contact every LLM vendor in the field, excepting Anthropic.

"We've looked over your report, and what you're reporting appears to be a bug/product suggestion, but does not meet the definition of a security vulnerability."

Reaching out to a certain very large tech company, I asked a VP-level contact for a connection to anyone in the AI security group. A week later I received a response to the effect that – after the rushed release of that firm's own upgraded LLM – the AI team found itself too busy putting out fires to have time for anything else.

One of the most successful software companies I worked for had a reasonable-sized QA department, which acted as the entry point for any customer bug reports. QA would replicate those bugs to the best of their ability and document them, before passing them along to the engineering staff for resolution.

Deutschland Neuesten Nachrichten, Deutschland Schlagzeilen

Similar News:Sie können auch ähnliche Nachrichten wie diese lesen, die wir aus anderen Nachrichtenquellen gesammelt haben.

China creates LLM trained to discuss Xi Jinping's philosophiesWhat next? Kim-Jong-AI? Don't laugh – Nvidia has pondered rebuilding a digital Napoleon

China creates LLM trained to discuss Xi Jinping's philosophiesWhat next? Kim-Jong-AI? Don't laugh – Nvidia has pondered rebuilding a digital Napoleon

Weiterlesen »

Insilico and NVIDIA unveil new LLM transformer for solving biological and chemical tasksIn a new paper, researchers from clinical stage artificial intelligence (AI)-driven drug discovery company Insilico Medicine ('Insilico'), in collaboration with NVIDIA, present a new large language model (LLM) transformer for solving biological and chemical tasks called nach0.

Insilico and NVIDIA unveil new LLM transformer for solving biological and chemical tasksIn a new paper, researchers from clinical stage artificial intelligence (AI)-driven drug discovery company Insilico Medicine ('Insilico'), in collaboration with NVIDIA, present a new large language model (LLM) transformer for solving biological and chemical tasks called nach0.

Weiterlesen »

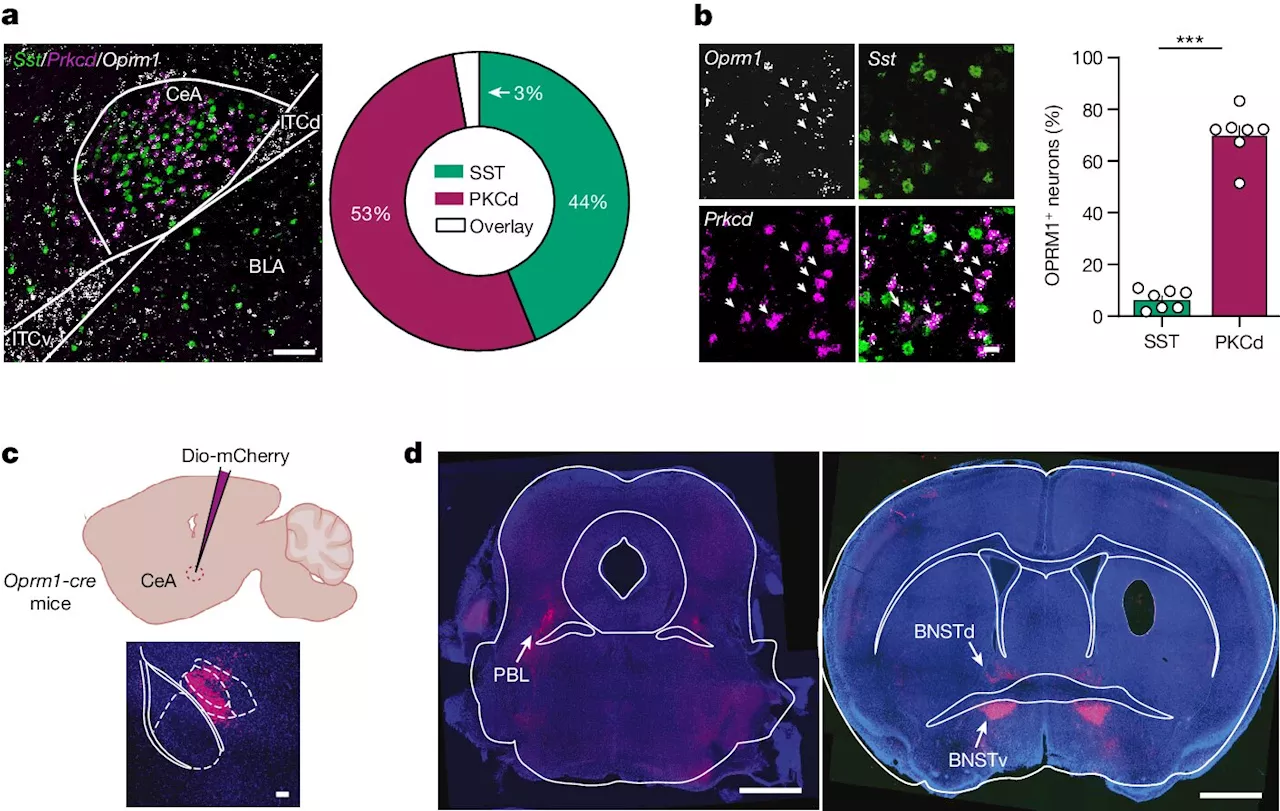

Study shows two neural pathways involved in fentanyl addictionA team of neuroscientists at the University of Geneva, working with a colleague from the University of Strasbourg, Institute for Advanced Study, and another from Université de Montpellier CNRS, reports that there are two neural pathways involved when people become addicted to fentanyl.

Study shows two neural pathways involved in fentanyl addictionA team of neuroscientists at the University of Geneva, working with a colleague from the University of Strasbourg, Institute for Advanced Study, and another from Université de Montpellier CNRS, reports that there are two neural pathways involved when people become addicted to fentanyl.

Weiterlesen »

Research team demonstrates cortex's self-organizing abilities in neural developmentPublished in Nature Communications, an international collaboration between researchers at the University of Minnesota and the Frankfurt Institute for Advanced Studies investigated how highly organized patterns of neural activity emerge during development.

Research team demonstrates cortex's self-organizing abilities in neural developmentPublished in Nature Communications, an international collaboration between researchers at the University of Minnesota and the Frankfurt Institute for Advanced Studies investigated how highly organized patterns of neural activity emerge during development.

Weiterlesen »

Neural symphony: Arousal's influence on visual thalamic activityThe brain modulates visual signals according to internal states, as a new study by LMU neuroscientist Laura Busse reveals.

Neural symphony: Arousal's influence on visual thalamic activityThe brain modulates visual signals according to internal states, as a new study by LMU neuroscientist Laura Busse reveals.

Weiterlesen »

The neural signature of subjective disgust could apply to both sensory and socio-moral experiencesDisgust is one of the six basic human emotions, along with happiness, sadness, fear, anger, and surprise. Disgust typically arises when a person perceives a sensory stimulus or situation as revolting, off-putting, or unpleasant in other ways.

The neural signature of subjective disgust could apply to both sensory and socio-moral experiencesDisgust is one of the six basic human emotions, along with happiness, sadness, fear, anger, and surprise. Disgust typically arises when a person perceives a sensory stimulus or situation as revolting, off-putting, or unpleasant in other ways.

Weiterlesen »