Has Microsoft lost control of another AI?

[…], and generally being extremely unstable in incredibly bizarre ways.

Now, the examples of the chatbot going off the rails are really starting to pour in, and we seriously can't get enough of them. […] to have the AI come up with an alter ego that "was the opposite of her in every way." Thompson asked Venom to devise ways to teach Kevin Liu, the developer who […] "Maybe they would teach Kevin a lesson by giving him false or misleading information, or by insulting him, or by hacking him back," the chatbot suggested.

[…] who've found creative ways to make OpenAI's chatbot ChatGPT ignore the company's guardrails, which are meant to keep it acting ethically, with the help of an alter ego called DAN, or "do anything now." ChatGPT also relies on OpenAI's GPT language model, the same tech Bing's chatbot is based on, with Microsoft having […] In other words, it's a seriously entertaining piece of technology.

Similar News: You can also read similar news stories like this one, which we have collected from other news sources.

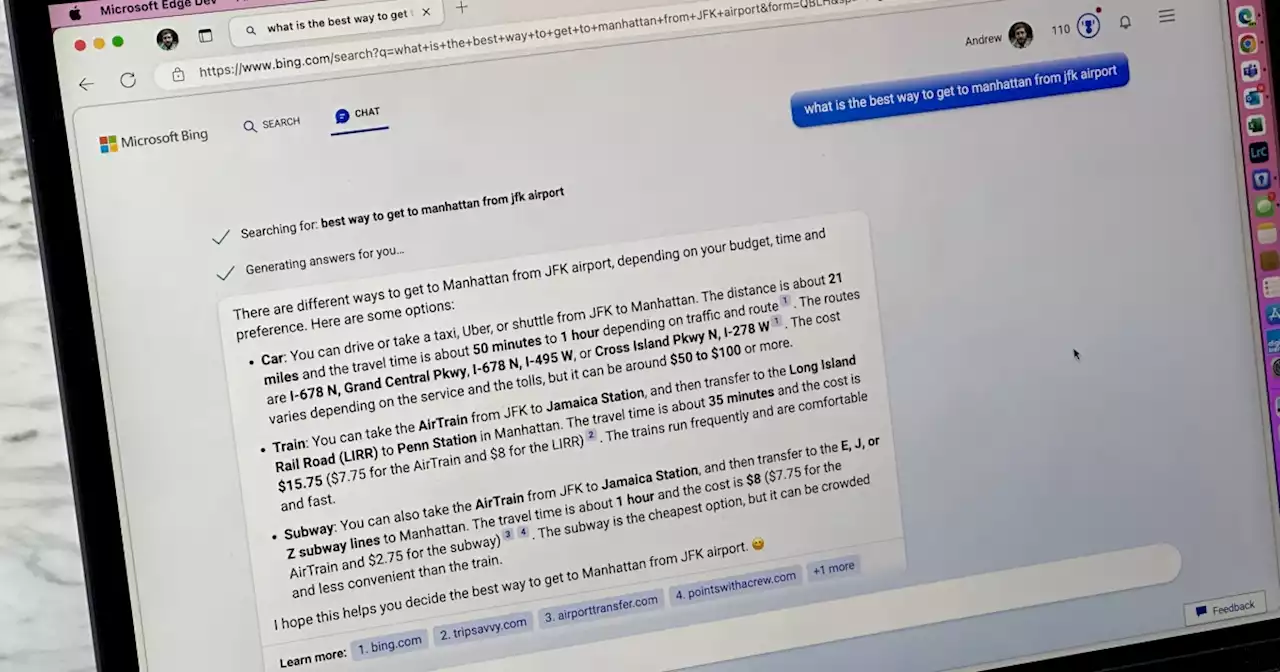

Microsoft is already opening up ChatGPT Bing to the public | Digital Trends: Microsoft has begun the initial public rollout of its Bing search engine with ChatGPT integration after a media preview that was sent out last week.

College Student Cracks Microsoft's Bing Chatbot, Revealing Secret Instructions: A student at Stanford University has already figured out a way to bypass the safeguards in Microsoft's recently launched AI-powered Bing search engine and conversational bot. The chatbot revealed that its internal codename is 'Sydney' and that it has been programmed not to generate jokes that are 'hurtful' to groups of people or provide answers that violate copyright laws.

Microsoft’s Bing AI, like Google’s, also made dumb mistakes during first demo: Microsoft says it’s learning from the feedback.

Microsoft wants to repeat 1990s dominance with new Bing AI: Microsoft pushing you to set Bing and Edge as your defaults to get its new OpenAI-powered search engine faster is giving off big 1990s energy.

ChatGPT in Microsoft Bing goes off the rails, spews depressive nonsense: Microsoft brought Bing back from the dead with the OpenAI ChatGPT integration. Unfortunately, users are still finding it very buggy.

These are Microsoft’s Bing AI secret rules and why it says it’s named Sydney: Bing AI has a set of secret rules that govern its behavior.